Cosine similarity in search engine optimization

What is cosine similarity?

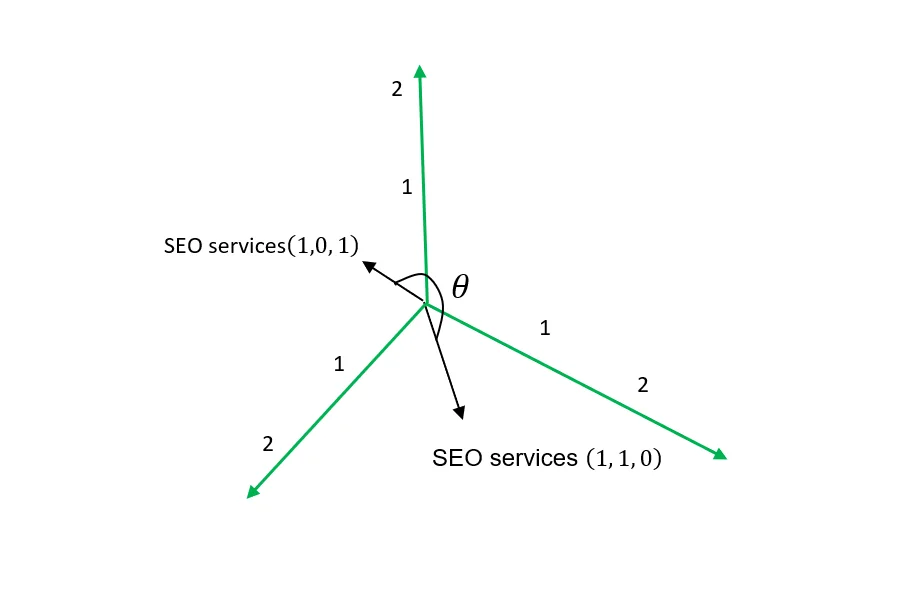

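Cosine similarity is the cosine of the angle between two non-zero vectors, and it measures how similar they are. For vectors A and B separated by an angle θ, the cosine similarity is cos(θ). It is 1 when they point in the same direction, 0 when they are orthogonal, and -1 when they point in opposite directions.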

The mathematical formula
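For two non-zero vectors A and B with n components each, the standard definition divides their dot product by the product of their lengths (Euclidean norms):

$$
\cos(\theta) = \frac{\mathbf{A} \cdot \mathbf{B}}{\lVert \mathbf{A} \rVert \, \lVert \mathbf{B} \rVert}
= \frac{\sum_{i=1}^{n} A_i B_i}{\sqrt{\sum_{i=1}^{n} A_i^{2}} \, \sqrt{\sum_{i=1}^{n} B_i^{2}}}
$$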

Doing this math by hand is tedious, and for the high-dimensional vectors used in real-world applications it is practically impossible.
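A computer does not care about the number of dimensions, though. Here is a minimal, standard-library-only sketch of the formula. The function name `cosine_similarity` and the toy 3-dimensional vectors are my own illustration, not taken from any library.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))    # A · B
    norm_a = math.sqrt(sum(x * x for x in a))  # ||A||
    norm_b = math.sqrt(sum(y * y for y in b))  # ||B||
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Toy 3-dimensional vectors; real embeddings have hundreds of dimensions.
a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]   # same direction as a
c = [-3.0, 0.0, 1.0]  # orthogonal to a: 1*(-3) + 2*0 + 3*1 = 0

print(round(cosine_similarity(a, b), 6))  # 1.0
print(round(cosine_similarity(a, c), 6))  # 0.0
```

Only the direction matters: b is twice as long as a but still scores 1.0.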

The manual approach also works poorly for short keywords because it ignores important factors such as word weighting and subword relationships. This is where word embeddings come in.
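To make that weakness concrete, this sketch applies the formula to raw word-count vectors of short keywords. It is my own illustration, not part of the workflow in this post, and the helper name `bow_cosine` is hypothetical.

```python
import math
from collections import Counter


def bow_cosine(phrase_a, phrase_b):
    """Cosine similarity of two phrases represented as raw word-count vectors."""
    counts_a = Counter(phrase_a.lower().split())
    counts_b = Counter(phrase_b.lower().split())
    vocab = sorted(set(counts_a) | set(counts_b))  # shared vocabulary = vector axes
    vec_a = [counts_a[w] for w in vocab]
    vec_b = [counts_b[w] for w in vocab]
    dot = sum(x * y for x, y in zip(vec_a, vec_b))
    norm = math.sqrt(sum(x * x for x in vec_a)) * math.sqrt(sum(y * y for y in vec_b))
    return dot / norm


# "affordable" and "cheap" mean nearly the same thing but share no token,
# so only the word "seo" contributes to the score.
print(round(bow_cosine("affordable seo", "cheap seo"), 3))  # 0.5
# "seo" and "search engine optimization" are treated as unrelated words.
print(round(bow_cosine("seo services", "search engine optimization services"), 3))  # 0.354
```

Count vectors only see exact token overlap, so synonyms and abbreviations score low. Embeddings are designed to close that gap.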

Word embedding is a computational implementation of the distributional hypothesis, the idea that words occurring in similar contexts tend to have similar meanings. Computers use natural language processing (NLP) to convert the words in a corpus into numerical vectors that capture their semantic meaning and relationships.
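The distributional hypothesis can be shown with plain co-occurrence counts instead of a trained model. In this sketch, each word becomes a vector of the other words that appear in the same sentence. The mini-corpus and helper names are hypothetical. This count-based method is a simpler stand-in, not how Word2Vec or Transformer models compute their vectors.

```python
import math
from collections import Counter, defaultdict

# Hypothetical mini-corpus: "affordable" and "cheap" never appear together,
# but they keep the same company.
corpus = [
    "affordable seo services",
    "cheap seo services",
    "affordable web design",
    "cheap web design",
    "cheap flights to mombasa",
    "nairobi is in kenya",
]


def cooccurrence_vectors(sentences):
    """Map each word to a Counter of the other words in the same sentence."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            for j, context in enumerate(tokens):
                if i != j:
                    vectors[word][context] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as Counters."""
    dot = sum(count * v[word] for word, count in u.items())  # missing keys count as 0
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v)


vectors = cooccurrence_vectors(corpus)
print(round(cosine(vectors["affordable"], vectors["cheap"]), 3))    # 0.756
print(round(cosine(vectors["affordable"], vectors["nairobi"]), 3))  # 0.0
```

"affordable" and "cheap" end up similar only because they share contexts ("seo", "services", "web", "design"), which is the intuition that learned embeddings scale up.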

There are many tools and libraries for word embedding, such as Word2Vec, developed by researchers at Google. Word2Vec learns static word representations from context using one of two architectures: continuous bag-of-words (CBOW), which predicts a word from its surrounding words, or skip-gram, which predicts the surrounding words from a word.
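Word2Vec itself trains a shallow neural network. This sketch covers only the step before training: how each architecture turns a sliding context window into training examples. The function names and window size are illustrative, and this is not a Word2Vec trainer.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: each centre word is paired with every word in its context window."""
    pairs = []
    for i, centre in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((centre, tokens[j]))  # (input word, word to predict)
    return pairs


def cbow_examples(tokens, window=2):
    """CBOW: the surrounding context words together predict the centre word."""
    examples = []
    for i, centre in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, centre))  # (input context, word to predict)
    return examples


tokens = "affordable seo services in kenya".split()
print(skipgram_pairs(tokens, window=1)[:3])
# [('affordable', 'seo'), ('seo', 'affordable'), ('seo', 'services')]
print(cbow_examples(tokens, window=1)[1])
# (['affordable', 'services'], 'seo')
```

Skip-gram yields many (word, context) pairs per sentence, while CBOW yields one example per position.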

This technique is worth implementing because Google itself relies on embedding-based language models, such as RankBrain and BERT, to understand queries and rank pages.

Implementing word embedding

I have tried both Word2Vec and Transformer-based embedding models, but I eventually settled on the Transformer-based approach because I had access to a pretrained model, all-MiniLM-L6-v2, that encodes whole phrases efficiently.

So, how do you generate the embeddings for your own keywords?

For example, suppose I want to extract keywords from competitors in Kenya who offer SEO services, determine the similarities between these keywords, and use them to optimize my page.

To save time and effort, I have already written a Python script using a Transformer-based embedding model.

Script to generate keyword embeddings

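The script extracts candidate keyword phrases (2- to 4-word n-grams) from competitor websites, generates embeddings with the all-MiniLM-L6-v2 Transformer model, calculates the cosine similarity between every pair of keywords, and saves the resulting matrix to an Excel file.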

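```python
# Requires: pip install requests beautifulsoup4 lxml nltk pandas sentence-transformers openpyxl
import re
from collections import Counter

import pandas as pd
import requests
from bs4 import BeautifulSoup
from nltk.util import ngrams  # pure-Python n-gram helper; no NLTK data download needed
from sentence_transformers import SentenceTransformer, util

# Top-ranking competitor pages to mine for keyword phrases
urls = {
    "competitor_1": "https://kenseo.co.ke/",
    "competitor_2": "https://artlydigitalmarketing.co.ke/top-seo-agency-in-kenya/",
    "competitor_3": "https://www.seosmart.co.ke/",
}

MIN_PHRASE_FREQ = 2   # keep phrases that appear at least twice across all pages
MIN_PHRASE_LEN = 12   # minimum phrase length in characters (drops short fragments)
NGRAM_RANGE = (2, 4)  # extract 2- to 4-word phrases


def fetch_clean_text(url):
    """Download a page and return its main text content, lower-cased."""
    headers = {"User-Agent": "Mozilla/5.0"}
    response = requests.get(url, headers=headers, timeout=15)
    response.raise_for_status()  # fail loudly on 4xx/5xx instead of parsing an error page
    soup = BeautifulSoup(response.text, "lxml")
    # Drop scripts, styles and navigation/boilerplate regions
    for tag in soup(["script", "style", "nav", "footer", "header", "noscript"]):
        tag.decompose()
    text = soup.get_text(separator=" ")
    text = re.sub(r"\s+", " ", text)  # collapse runs of whitespace
    return text.lower()


def extract_phrases(text, min_n=2, max_n=4):
    """Return every n-gram (min_n..max_n words) of alphabetic tokens in text."""
    tokens = re.findall(r"[a-zA-Z]+", text)
    phrases = []
    for n in range(min_n, max_n + 1):
        phrases.extend(" ".join(gram) for gram in ngrams(tokens, n))
    return phrases


all_phrases = []
for name, url in urls.items():
    text = fetch_clean_text(url)
    all_phrases.extend(extract_phrases(text, *NGRAM_RANGE))
    print(f"Processed: {name}")

# Keep phrases that are both frequent enough and long enough
phrase_counts = Counter(all_phrases)
keywords = [
    phrase for phrase, count in phrase_counts.items()
    if count >= MIN_PHRASE_FREQ and len(phrase) >= MIN_PHRASE_LEN
]
if not keywords:
    raise SystemExit("No phrases passed the filters; try lowering the thresholds.")

# Encode each phrase as a dense vector and compare every pair
model = SentenceTransformer("all-MiniLM-L6-v2")
embeddings = model.encode(keywords, convert_to_tensor=True)
cosine_matrix = util.cos_sim(embeddings, embeddings)

# Square keyword-by-keyword similarity table, saved for filtering in Excel
similarity_df = pd.DataFrame(
    cosine_matrix.cpu().numpy(),
    index=keywords,
    columns=keywords,
)
similarity_df.to_excel("competitor_keyword_similarity2.xlsx")  # needs openpyxl
```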
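To see what MIN_PHRASE_FREQ and MIN_PHRASE_LEN keep, here is a standard-library-only re-creation of the extract, count and filter steps. It runs on two made-up snippets instead of live pages, so no network access or model is needed. It swaps NLTK's `ngrams` for a `zip` over shifted token lists, which produces the same consecutive word windows. The snippets and the names `ngram_phrases` and `filter_keywords` are illustrative.

```python
import re
from collections import Counter


def ngram_phrases(text, min_n=2, max_n=4):
    """Every n-gram (min_n..max_n words) of alphabetic tokens, lower-cased."""
    tokens = re.findall(r"[a-zA-Z]+", text.lower())
    phrases = []
    for n in range(min_n, max_n + 1):
        # zip over n shifted copies of the token list yields consecutive n-word windows
        phrases.extend(" ".join(gram) for gram in zip(*(tokens[i:] for i in range(n))))
    return phrases


def filter_keywords(phrases, min_freq=2, min_len=12):
    """Keep phrases seen at least min_freq times and at least min_len characters long."""
    counts = Counter(phrases)
    return sorted(p for p, c in counts.items() if c >= min_freq and len(p) >= min_len)


# Hypothetical snippets standing in for two competitor pages
page_a = "Affordable SEO services in Kenya. We offer SEO services."
page_b = "Looking for affordable SEO services in Kenya? Call us."

phrases = ngram_phrases(page_a) + ngram_phrases(page_b)
print(filter_keywords(phrases))
# ['affordable seo', 'affordable seo services', 'affordable seo services in',
#  'seo services', 'seo services in', 'seo services in kenya', 'services in kenya']
```

"in kenya" and "services in" also appear twice, but they are shorter than 12 characters, so the length filter drops them.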

Keyword analysis using cosine similarity

My usual workflow is to search Google for a phrase in my niche, such as “SEO services in Kenya”, and collect the exact URLs of the top-ranking pages, usually the top 5 or 10, because that is where you want to be.

You can also get these URLs from tools such as Semrush, but collecting your own primary data from the live search results is always better.

With the URLs ready, add them to the urls dictionary in the script and run it to generate the full list of keywords, with the cosine similarity calculated between every pair.
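If you paste the URLs into a plain text file, one per line, a small helper can build the dictionary for you. This is a sketch under my own assumptions: the helper `build_url_map` and the file name `competitor_urls.txt` are hypothetical, and the `competitor_N` keys simply follow the naming used in the script.

```python
def build_url_map(lines):
    """Turn one-URL-per-line text into the {"competitor_N": url} dict the script uses."""
    urls = {}
    seen = set()
    for line in lines:
        url = line.strip()
        # Skip blank lines, comment lines and repeats; a page listed twice would
        # double-count its phrases and inflate MIN_PHRASE_FREQ matches.
        if not url or url.startswith("#") or url in seen:
            continue
        seen.add(url)
        urls[f"competitor_{len(urls) + 1}"] = url
    return urls


# Usage with a hypothetical file:
# with open("competitor_urls.txt", encoding="utf-8") as f:
#     urls = build_url_map(f)
sample = """
https://kenseo.co.ke/
# copied from the Google results page
https://www.seosmart.co.ke/
""".splitlines()
print(build_url_map(sample))
# {'competitor_1': 'https://kenseo.co.ke/', 'competitor_2': 'https://www.seosmart.co.ke/'}
```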

All that is left is to sort the keywords by cosine similarity, keep the ones most similar to your target phrase, and use them to optimize your page.

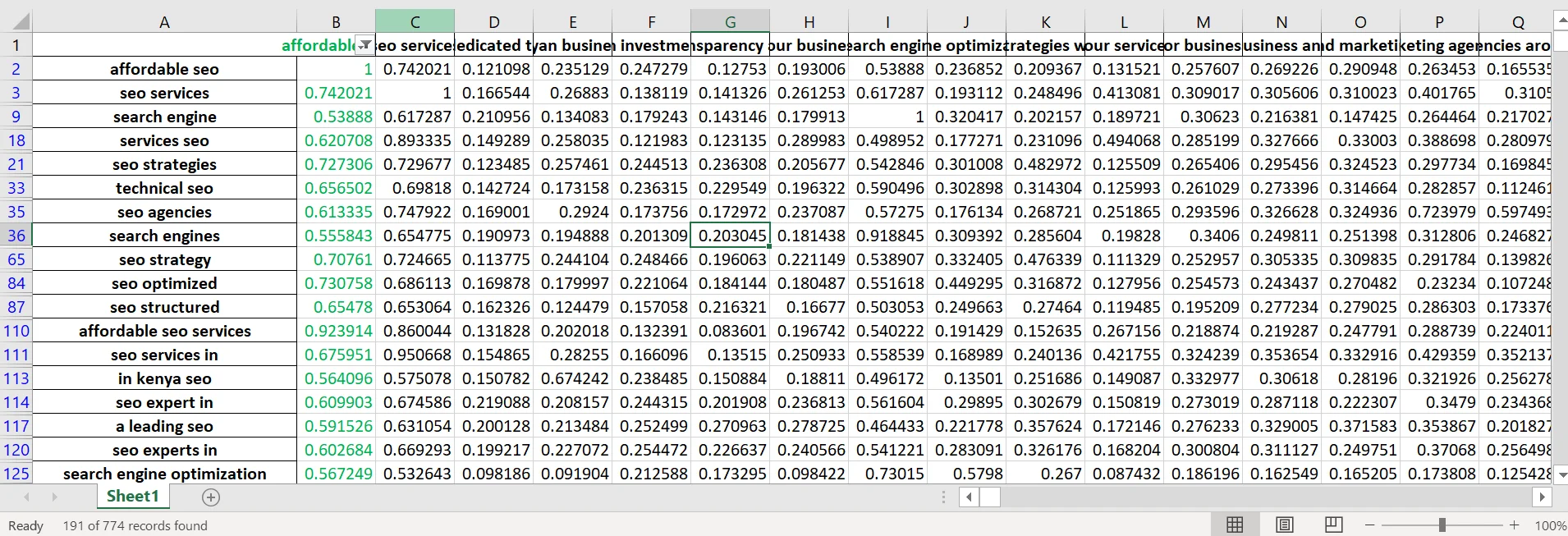

For example, I got 774 keywords from three competitors. When I picked the one I thought was most relevant to my page, “affordable SEO”, and filtered for related keywords with a cosine similarity greater than or equal to 0.5, I ended up with just 191 keywords that I can use on the same page.

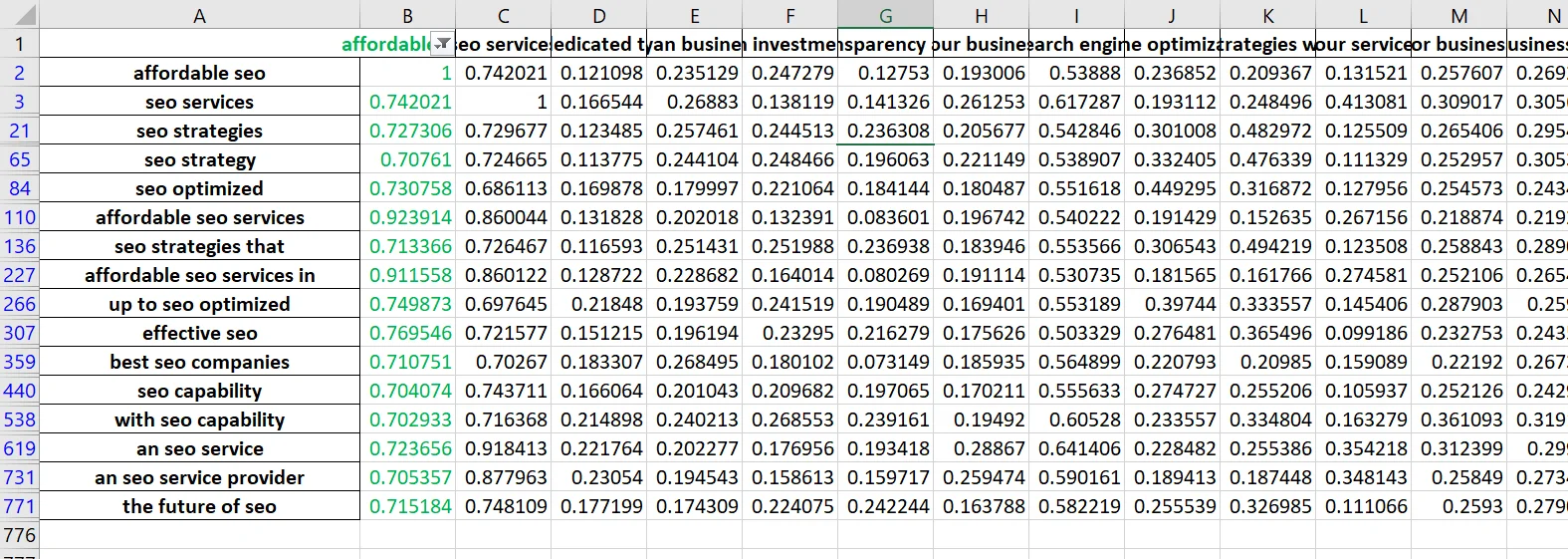

I can tighten the filter to keep only the most closely related keywords, e.g. those with a cosine similarity greater than or equal to 0.7, which leaves 16 keywords that likely share the same search intent.
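The same filter can be scripted instead of done in Excel. This is a minimal sketch: `related_keywords` is a hypothetical helper, and the scores below are made up for illustration, not taken from my spreadsheet. With the script above, you could pass `similarity_df.to_dict()`, which pandas returns as `{column: {row: value}}`. Because the matrix is symmetric, a column holds the same scores as the matching row.

```python
def related_keywords(similarity, target, threshold=0.5):
    """(keyword, score) pairs with similarity to target >= threshold, highest first.

    The target keyword itself (always 1.0) is excluded.
    """
    scores = similarity[target]
    hits = [(kw, s) for kw, s in scores.items() if kw != target and s >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)


# Hypothetical scores for illustration only
similarity = {
    "affordable seo": {
        "affordable seo": 1.0,
        "cheap seo services": 0.78,
        "seo services in kenya": 0.61,
        "web design company": 0.32,
    },
}

print(related_keywords(similarity, "affordable seo", threshold=0.5))
# [('cheap seo services', 0.78), ('seo services in kenya', 0.61)]
print(related_keywords(similarity, "affordable seo", threshold=0.7))
# [('cheap seo services', 0.78)]
```

Raising the threshold trades recall for precision, which is the same choice as moving the Excel filter from 0.5 to 0.7.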

Hopefully I am not the only math nerd who finds this interesting. I hope you find it helpful and time-saving as well. Cheers!

Francis

Author